DELL PowerVault ME4024 – best practices

Volume Tier Affinity Feature

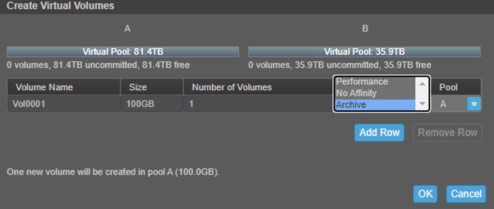

- No Affinity – The default strategy in PowerVault ME4 is to prefer the highest spinning-disk (non-SSD) tier for new sequential writes and the highest available tier (including SSD) for new random writes. As the host application later accesses the data, it is moved to the most appropriate tier based on demand: ‘hot’ data is promoted toward the highest-performance tier, and ‘cold’ data is demoted to the lower spinning-disk tiers. Data on volumes set to ‘No Affinity’ follows this standard strategy.

- Performance – For data on volumes set to the ‘Performance’ affinity, all new writes follow the standard strategy. Subsequent access to that data, however, has a lower threshold for promotion, making it more likely that the data is available on the higher-performance tiers. ‘Hot’ data with performance affinity also gets preferential treatment at the SSD tier, so archive or no-affinity data is more likely to be demoted out of the SSD tier to make room. This is useful for volumes whose data you know will be in demand and that you want prioritized for promotion to, and retention in, your highest-performance tier.

- Archive – For volumes set to the ‘Archive’ affinity, all new writes are initially placed in the archive tier as long as space is available; if no space is available, they are placed on the next higher available tier. Subsequent access can still promote that data to the performance tiers as it becomes ‘hot’, but it has a lower threshold for demotion and is moved out of the highest-performance SSD tier if ‘hot’ data needs to be promoted from a lower tier.

Powering on with expansion enclosures

- Drive enclosures first – Ensures that the disks in the drive enclosure have enough time to completely spin up before being scanned by the controller modules within the controller enclosure. The LEDs blink while the enclosures power up. After the LEDs stop blinking – if the LEDs on the front and back of the enclosure are not amber – the power-on sequence is complete, and no faults have been detected.

- Controller enclosure next – Depending upon the number and type of disks in the system, it may take several minutes for the system to become ready.

- Data host last – Only applies if the host was powered off for maintenance purposes. When powering off, reverse the order of the steps used for powering on.

VMware SATP Claim Rule

Modifying the SATP claim rule is advantageous because it applies to all current and future datastores added to the ESXi host, but it requires a reboot to take effect. To automatically set multipathing to round robin and set the IOPS path-change condition for all current and future volumes mapped to an ESXi host, create a claim rule with the following commands:

esxcli storage nmp satp rule add -V "DellEMC" -M "ME4" -s "VMW_SATP_ALUA" -P "VMW_PSP_RR" -O "iops=3" -c="tpgs_on"

esxcli storage core claimrule load

A reboot is required for claim rule changes to take effect.
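After the reboot, it can help to confirm that the rule exists and to see which path selection policy each device actually picked up. The following is a minimal sketch, not an official procedure: the function name `check_me4_rule` is made up for illustration, and it assumes it is run in an ESXi shell where `esxcli` is on the PATH. The grep patterns rely on the field labels that `esxcli storage nmp device list` normally prints.

```shell
#!/bin/sh
# Sketch: verify the ME4 SATP claim rule and the resulting device policy.
# check_me4_rule is a hypothetical helper name, not part of esxcli.
check_me4_rule() {
    if command -v esxcli >/dev/null 2>&1; then
        # Show only the SATP rule entries that mention the ME4 model.
        esxcli storage nmp satp rule list | grep -i "ME4"
        # Show each device name with its path selection policy and
        # the policy's device config line.
        esxcli storage nmp device list | grep -E "Device Display Name|Path Selection Policy"
    else
        # Not on an ESXi host: say so instead of failing silently.
        echo "esxcli not found; run this on the ESXi host"
    fi
}

check_me4_rule
```

The "Path Selection Policy Device Config" line in the output is where the policy and IOPS values for each device are reported.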

Thanks for that

When you add that rule, what do you see when you list the device?

Does it show as policy=iops;iops=3

or

policy=rr;iops=3